Do text-free diffusion models learn discriminative visual representations?

In Proceedings of the European Conference on Computer Vision (ECCV), Sep 2024

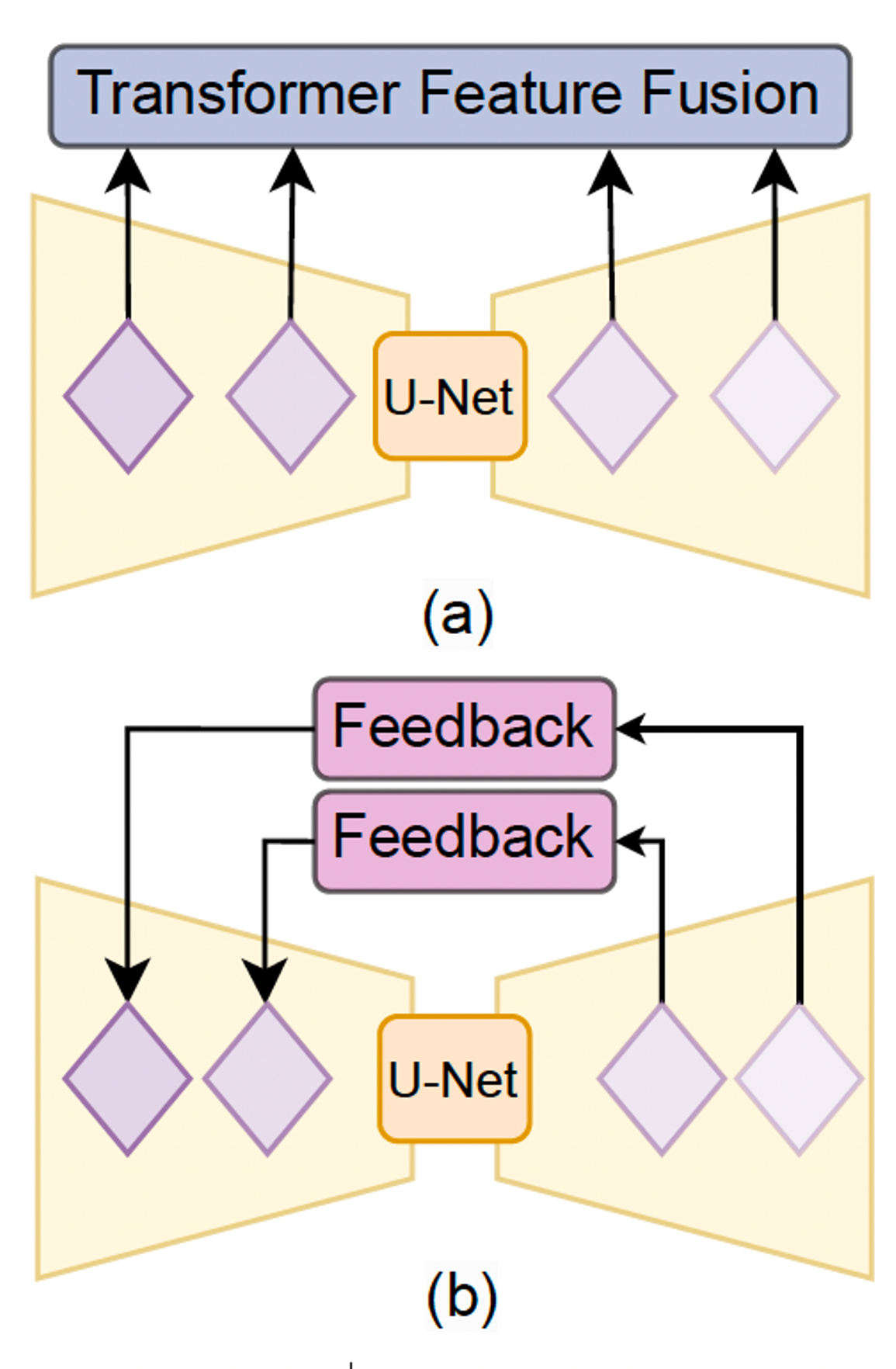

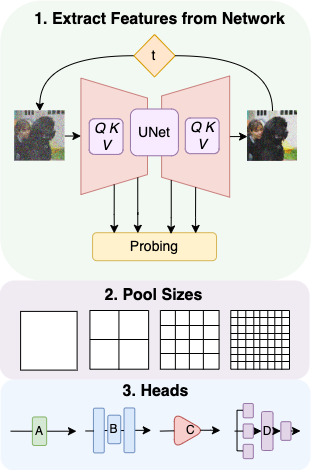

While many unsupervised learning models focus on one family of tasks, either generative or discriminative, we explore the possibility of a unified representation learner: a model that addresses both families of tasks simultaneously. We identify diffusion models, a state-of-the-art method for generative tasks, as a prime candidate. Such models train a U-Net to iteratively predict and remove noise, and the resulting model can synthesize high-fidelity, diverse, novel images. We find that the intermediate feature maps of the U-Net are diverse, discriminative feature representations. We propose a novel attention mechanism for pooling feature maps and further leverage this mechanism in DifFormer, a transformer that fuses features from different diffusion U-Net blocks and noise steps. We also develop DifFeed, a novel feedback mechanism tailored to diffusion. We find that diffusion models are better representation learners than GANs and, with our fusion and feedback mechanisms, can compete with state-of-the-art unsupervised image representation learning methods on discriminative tasks: image classification with full and semi-supervision, transfer for fine-grained classification, object detection and segmentation, and semantic segmentation.
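The abstract names an attention mechanism for pooling feature maps but does not give its form. As a rough illustration only, the sketch below shows generic single-query, scaled dot-product attention pooling over the spatial positions of a flattened feature map. It then reuses the same operation to fuse pooled descriptors from several U-Net blocks or noise steps. This is an assumption-laden stand-in, not the paper's implementation: the names `attention_pool` and `fuse_pooled`, the single query vector, and the toy feature maps are all hypothetical, and DifFormer is described as a full transformer rather than a single attention step.

```python
import math
from typing import List

# A feature vector of C channels; a flattened feature map is a list of H*W such vectors.
Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a non-empty list of scores."""
    m = max(scores)  # subtract the max so exp() cannot overflow
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(tokens: List[Vector], query: Vector) -> Vector:
    """Pool N tokens of dimension C into one C-dim vector with a single query.

    Scaled dot-product scores -> softmax weights -> weighted sum of tokens.
    `query` stands in for a learned parameter (hypothetical; untrained here).
    """
    scale = 1.0 / math.sqrt(len(query))
    scores = [scale * sum(q * x for q, x in zip(query, tok)) for tok in tokens]
    weights = softmax(scores)
    return [sum(w * tok[c] for w, tok in zip(weights, tokens)) for c in range(len(query))]


def fuse_pooled(pooled: List[Vector], fusion_query: Vector) -> Vector:
    """Hypothetical fusion step: treat each pooled (block, noise step) descriptor
    as a token and attend over them. One attention step is a simplification,
    not the transformer described for DifFormer."""
    return attention_pool(pooled, fusion_query)


# Toy usage: two flattened 2x2 feature maps (4 spatial tokens, C = 2 channels),
# imagined as coming from two different (U-Net block, noise step) pairs.
fmap_a = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]
fmap_b = [[2.0, 2.0], [2.0, 2.0], [2.0, 2.0], [2.0, 2.0]]
q = [0.0, 0.0]  # zero query: all scores equal, so the weights are uniform

pooled = [attention_pool(fmap_a, q), attention_pool(fmap_b, q)]
fused = fuse_pooled(pooled, q)
print(fused)  # prints [1.25, 1.25]
```

With a zero query every score is equal, so the weights are uniform and attention pooling reduces to global average pooling. A trained query would instead up-weight the spatial positions (or, in the fusion step, the blocks and noise steps) most useful for the downstream task.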

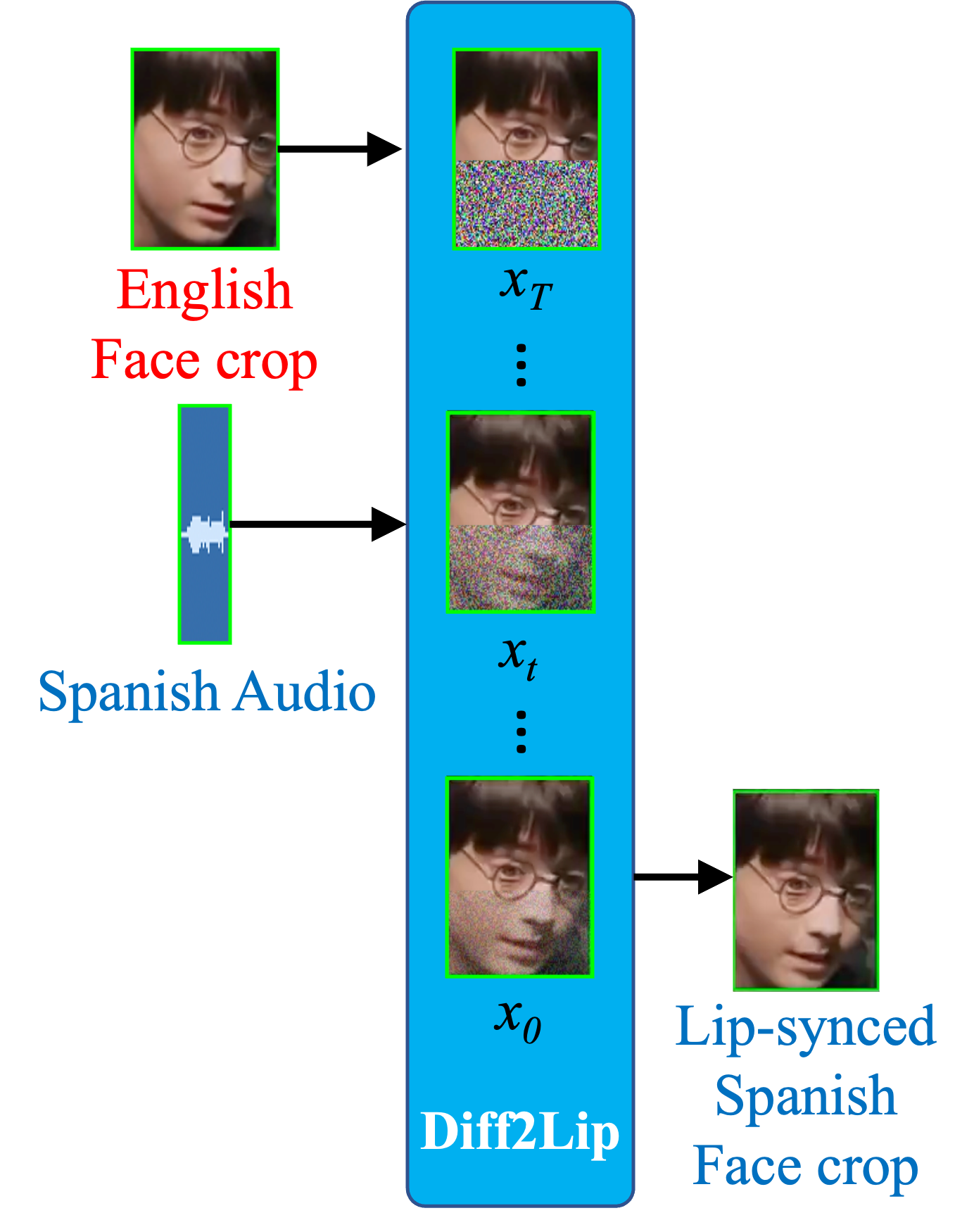

Diff2Lip: Audio Conditioned Diffusion Models for Lip-Synchronization

In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Jan 2024


Diffusion Models Beat GANs on Image Classification

arXiv preprint, Jan 2023